Harassment is something women face every day, a reality we wish didn’t exist, yet it continues to shape lived experiences across generations. For young women like Anuhya, it isn’t an abstract statistic. It’s personal. It’s real. And it’s precisely what inspired her and her teammates, Pranav and Aditya, to ask a bold question:

“How can we turn our experiences into something that actually combats harassment?”

In a world where AI is reshaping everything from job hunts to creative work, this Gen Z trio is challenging the idea that innovation has to be detached from humanity. In fact, their work proves the opposite: the most impactful technology begins with empathy.

Meet the Builders

The three teamed up for a hackathon at Amrita University, but what they built went far beyond a project submission.

Harassment isn’t just a women’s issue; it’s everybody’s issue. Yet many bystanders feel unsure about stepping in, whether because of fear, social norms, or a lack of guidance.

Their Solution

Gender issues are vast, but where do you even begin? The team took a grounded approach: they reached out to NGOs working on the frontlines. That’s how they connected with Durga, an organization training bystanders, shopkeepers, auto drivers, and community members to intervene during harassment.

Durga’s challenge sparked an idea:

What if bystander training could exist in the form of a single, simple phone call?

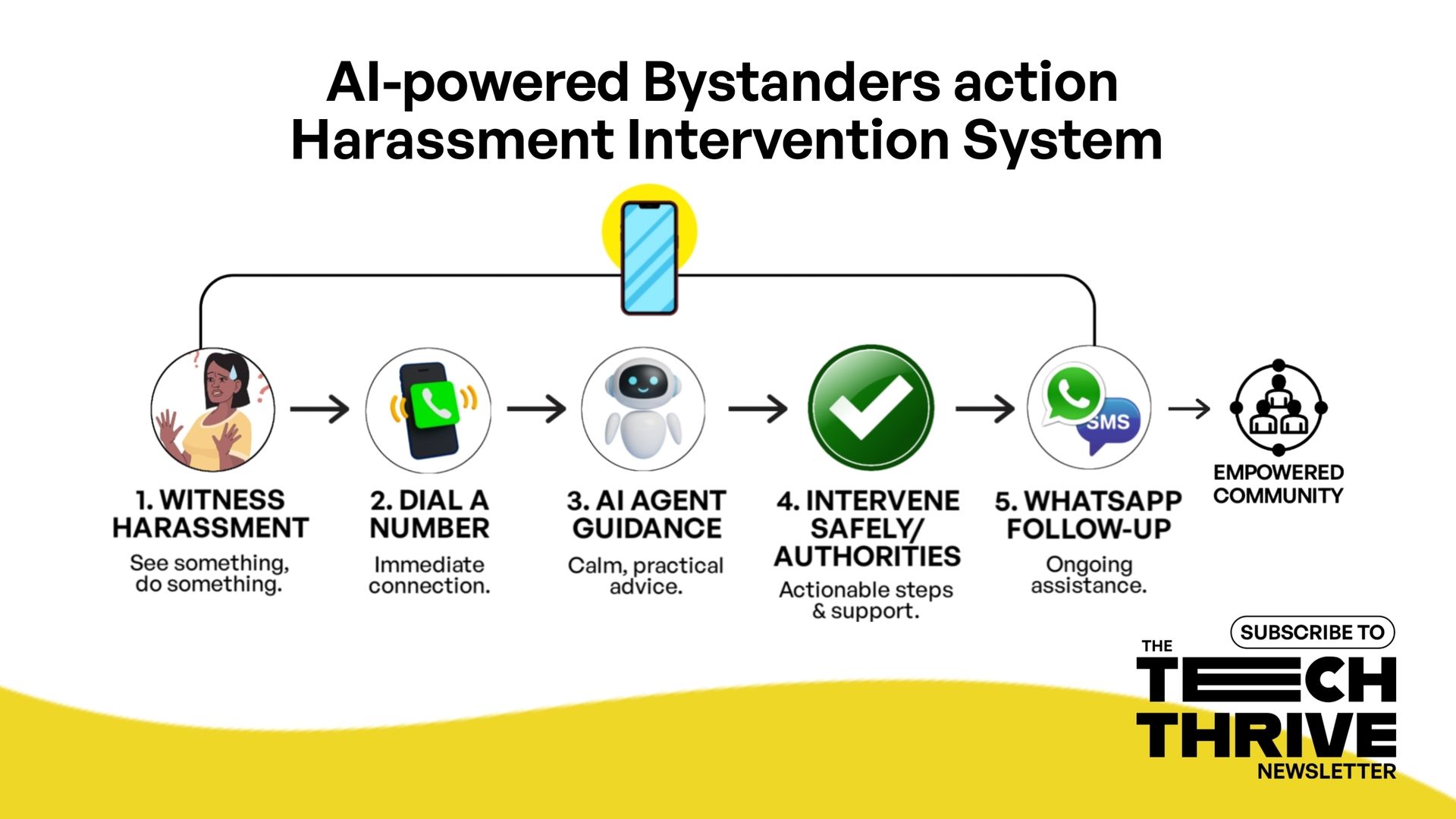

The team created an AI-powered caller system to enable quick, frictionless bystander action:

Witness harassment.

Dial a phone number.

An AI agent immediately guides you through the situation.

Get practical steps to intervene safely.

Connect with relevant authorities.

Continue the conversation seamlessly over WhatsApp.

It’s a rapid-response support system designed not for victims alone but for bystanders: the people who often freeze because “it’s not my place to intervene.”

Harassment isn’t always violent. Sometimes it’s subtle comments, boundary-crossing behavior, or microaggressions that unfold even in “safe” spaces. And stepping in, especially for strangers, can feel intimidating.

It’s much easier to stand up for your friends. But how do you create a sense of collective responsibility for someone you don’t know?

Intersectionality in Design: Why Diverse Teams Matter

Working with Indian communities taught Aditya something fundamental:

Women’s experiences aren’t monolithic. Caste, class, and socioeconomic background all add layers to how harassment shows up and how support systems respond. That’s why inclusive design isn’t optional. It’s essential.

If we don’t ask who this tool is speaking to and who created it, we risk building a product that works for no one.

The team constantly checked perspectives, questioned assumptions, and leaned on each other’s lived experiences. As Aditya put it, listening was the real design process.

For Anuhya, this wasn’t just an academic exercise. Her lived experiences gave the team an internal compass and a reminder: harassment isn’t just a women’s issue. It’s everybody’s issue.

“I’ve faced harassment. My friends have faced it. I kept asking myself: would this tool have helped me in that moment?”

How the Work Changed the Men on the Team

Both Aditya and Pranav admitted something powerful: working on the project changed them. Pranav shared that he hadn’t realized the massive difference bystanders could make. Now he feels a responsibility to act, empowered by knowledge rather than fear.

Aditya described the empathy he developed simply by truly listening to the women around him.

At the end of the day, human energy and human agency are unmatched. AI can assist, but we still make things happen.

What they bring to this AI conversation is not just technical skill but empathy, accountability, and a refusal to accept “this is just how things are.” Their work shows that the future of ethical AI won’t be built in isolation.

It will be built by:

Women who’ve lived the problem

Men willing to question their assumptions

Engineers committed to scalability and responsibility

Designers advocating for inclusion

And, most importantly, by people who believe that technology should serve humanity, not the other way around.

This conversation isn’t just about technology; it’s about human agency, empathy, and responsibility. By combining AI with design thinking and ethical reflection, these young innovators are shaping a safer, more inclusive future.

Takeaways from the Conversation:

Harassment intersects with class, caste, and socioeconomic differences, and technology solutions need to account for these layers.

Gender-diverse teams lead to better tech: perspectives from both men and women were crucial in designing a tool sensitive to real human experiences.

AI is a catalyst, not a replacement: AI can assist in empathy-driven solutions but cannot fully understand human emotions or replace human judgment.

Environmental and ethical responsibility: building AI comes with high energy costs and ethical trade-offs, which the team actively weighs in its design process.

Despite concerns about AI’s impact on jobs, access to technology allows Gen Z to innovate, adapt, and focus on solving meaningful problems.

Listen to the full episode to explore how these students are reshaping the conversation with perspectives on culture, human-centered design, and responsible innovation that traditional tech leadership often overlooks.

About the Guests:

Pranav Sridhar, AI Engineer, IIT Madras & Sarvam AI: Focused on building AI systems that scale responsibly across India.

Anuhya Mahesh, Creative Education Student, Srishti School of Art, Design & Technology: Passionate about designing learning experiences and human-centered tech.

Aditya Sonwane, Design Expert, Srishti: Works on accessible and inclusive design solutions for diverse communities.

And hey, if this sparked an idea or made you think differently, share it with someone who needs to hear it. 💬✨

Your feedback helps us shape future conversations. I’d love to hear from you. Drop me a note, suggestion, or just say hi right here. 📨